Long time no see, or, from an Icinga developer’s point of view: too busy developing to write a blog post 🙂 Anyway, since this post isn’t about the upcoming Icinga 1.10 release (due at OSMC this year, with live demos during our presentation), I’ll skip the 1.x part here. Just a personal note to all the “2.x means 1.x development is dead” opinion makers: you’re wrong. Look at the roadmap, get in touch with your ideas for 1.x, tell the team about your concerns, or even help out!

If you remember the 0.0.2 technology preview release of Icinga 2, the overall idea was to provide a feature-equivalent version of what Icinga Core 1.x currently provides for your monitoring. That included a compat component writing status.dat, objects.cache and icinga.log, which serve the data the Classic UI needs to run standalone with Icinga 2. Livestatus had been partially prototyped but was not ready yet, and neither was the IDO component as a compatible database backend.

We hold regular development meetings on the status of Icinga 2, where we also discuss progress and to-dos. After 0.0.2 we decided to split the to-dos into finishing the data providers for the LivestatusListener and designing and implementing a completely new IDO database component, keeping only the existing database schema (1.10, to be exact). We’ve also decided to keep the name “IDO” for now, even if some people tend to blame it for bad performance in general. The Icinga 2 IdoMysqlConnection works as a single connection that fires database queries based on the framework events it receives. It’s backed by a generic library named “db_ido” which knows about the database table relationships and objects. That will make it easier to add databases other than MySQL in the future. Please keep in mind that we might have our own database backend schema at some point; that just hasn’t been designed or prototyped yet. Keeping IDO schema compatibility on board lets us support our very own tool stack (Web, Reporting) as well as other addons (NagVis, etc.).
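
To make the split between a generic “db_ido” layer and a backend-specific connection more tangible, here’s a minimal Python sketch of the idea, not the actual Icinga 2 code. All names (`EVENT_TABLES`, `build_query`, `DbConnection`, `SqliteConnection`) are hypothetical, the table and column names are heavily simplified (the real IDO schema uses `icinga_`-prefixed tables with many more columns), and SQLite stands in for MySQL so the sketch runs without a server.

```python
import sqlite3
from abc import ABC, abstractmethod

# Hypothetical, simplified mapping a generic "db_ido"-style layer might own:
# which table an event type belongs to and which event fields become columns.
EVENT_TABLES = {
    "state_change": ("statehistory", ("object_name", "state", "output")),
    "notification": ("notifications", ("object_name", "output")),
}

def build_query(event_type, event):
    """Translate a framework event into a backend-neutral (table, columns, values) triple."""
    table, columns = EVENT_TABLES[event_type]
    return table, columns, tuple(event[c] for c in columns)

class DbConnection(ABC):
    """Backend-specific part: only knows how to execute an insert."""

    @abstractmethod
    def execute_insert(self, table, columns, values): ...

    def on_event(self, event_type, event):
        # A single connection fed by events: translate generically, then fire the query.
        self.execute_insert(*build_query(event_type, event))

class SqliteConnection(DbConnection):
    """Stand-in for a MySQL connection so the sketch is self-contained."""

    def __init__(self):
        self.conn = sqlite3.connect(":memory:")
        for table, columns in EVENT_TABLES.values():
            self.conn.execute(f"CREATE TABLE {table} ({', '.join(columns)})")

    def execute_insert(self, table, columns, values):
        placeholders = ", ".join("?" for _ in columns)
        self.conn.execute(
            f"INSERT INTO {table} ({', '.join(columns)}) VALUES ({placeholders})", values
        )

db = SqliteConnection()
db.on_event("state_change", {"object_name": "web01", "state": 2, "output": "CRITICAL"})
print(db.conn.execute("SELECT object_name, state FROM statehistory").fetchall())
# [('web01', 2)]
```

Adding another database in this model means writing one more `DbConnection` subclass; the event-to-table knowledge stays in one place.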

Once we had finished the config and status data providers for LivestatusListener and IdoMysqlConnection in late summer, we ran head-first into ‘historical’ issues. While it seems reasonable to just subscribe to all the event signals and stash them into logs or a database backend, there were two problems with that. First, the native livestatus module in 1.x loads icinga.log and its archives into core memory and filters them to produce log and statehistory data. That method is pretty ugly, but given no other backend (such as a database) it seems reasonable, at least for the moment. The second problem was more generic: Icinga 2 will have cluster and distributed features built in. This fundamentally changes the way data is processed and synchronized compared to a single standalone instance.
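
To illustrate why the log-based approach is memory-hungry, here’s a minimal Python sketch (not the livestatus module’s actual code) that reads Icinga 1.x alert lines from icinga.log into memory and filters them into statehistory-like entries. It assumes the 1.x line formats `[timestamp] SERVICE ALERT: host;service;state;state type;attempt;output` and `[timestamp] HOST ALERT: host;state;state type;attempt;output`; `StateHistoryEntry`, `parse_alerts` and `state_history` are hypothetical names.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Matches Icinga 1.x style alert lines, e.g.
# [1379328000] SERVICE ALERT: web01;HTTP;CRITICAL;HARD;3;Connection refused
LINE_RE = re.compile(r"^\[(\d+)\] (HOST|SERVICE) ALERT: (.*)$")

@dataclass
class StateHistoryEntry:
    timestamp: int
    host: str
    service: Optional[str]  # None for host alerts
    state: str
    state_type: str
    output: str

def parse_alerts(lines):
    """Yield one entry per host/service alert line; skip everything else."""
    for line in lines:
        m = LINE_RE.match(line.rstrip("\n"))
        if not m:
            continue  # notifications, external commands, etc.
        ts, kind, payload = int(m.group(1)), m.group(2), m.group(3)
        if kind == "SERVICE":
            parts = payload.split(";", 5)  # output may itself contain ';'
            if len(parts) != 6:
                continue  # malformed line
            host, service, state, state_type, _attempt, output = parts
        else:
            parts = payload.split(";", 4)
            if len(parts) != 5:
                continue
            host, state, state_type, _attempt, output = parts
            service = None
        yield StateHistoryEntry(ts, host, service, state, state_type, output)

def state_history(lines, host, since, until):
    # The whole log (and its archives) is parsed and held in memory, then filtered.
    entries = list(parse_alerts(lines))
    return [e for e in entries if e.host == host and since <= e.timestamp <= until]
```

Every history query pays for scanning and holding the full log, which is exactly what a database backend avoids.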

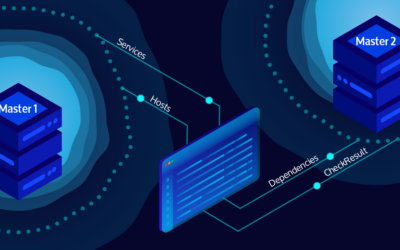

Furthermore, we weren’t satisfied with built-in replication on cluster setups that requires all instances to ‘see’ each other. In an ideal world that would work, but in custom environments with different network segments, policies and firewalls it quickly turns into “does not work ™”. Since the historical data depends on the events that a cluster of more than one node generates, we’ve had several meetings and lots of discussions since then. The most significant changes to the existing implementation are that we now have a) a sort of binlog replay of messages to keep nodes in sync, and b) optional config sync, where a master node pushes config parts to a slave node (which must be configured to accept configs from that specific node). The nodes trust each other based on SSL certificates and local configuration. It’s even possible to run a “slave” node as a dumb checker (like a mod_gearman worker) that sends its data back to more than one “master” node. That’s a special case, though; the more interesting part is that on connection loss, the slave instance keeps doing what it’s gotta do (checking services), and once the connection is restored, it syncs all the data back to the master.
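
The post doesn’t describe the replay protocol itself, so the following is only a conceptual Python sketch of binlog-style replay under simple assumptions: the slave appends every check result to a sequence-numbered log, keeps checking while disconnected, and on reconnect replays everything after the master’s last acknowledged sequence number. `ReplayLog`, `Master` and `Slave` are hypothetical; a real cluster would persist the log and exchange the acknowledged position over the network.

```python
from dataclasses import dataclass, field

@dataclass
class ReplayLog:
    """Append-only message log with monotonically increasing sequence numbers."""
    entries: list = field(default_factory=list)
    next_seq: int = 1

    def append(self, message):
        self.entries.append((self.next_seq, message))
        self.next_seq += 1

    def since(self, seq):
        """All messages newer than the given sequence number."""
        return [(s, m) for s, m in self.entries if s > seq]

class Master:
    def __init__(self):
        self.received = []
        self.last_ack = 0  # highest sequence number applied so far

    def receive(self, seq, message):
        if seq <= self.last_ack:
            return  # duplicate (already applied): ignore
        self.received.append(message)
        self.last_ack = seq

class Slave:
    def __init__(self, master):
        self.master = master
        self.log = ReplayLog()
        self.connected = True

    def check_result(self, message):
        # Checks keep running regardless of the connection state.
        self.log.append(message)
        if self.connected:
            seq, msg = self.log.entries[-1]
            self.master.receive(seq, msg)

    def reconnect(self):
        self.connected = True
        # Reading master.last_ack directly stands in for the master
        # telling the slave where it left off.
        for seq, msg in self.log.since(self.master.last_ack):
            self.master.receive(seq, msg)

master = Master()
slave = Slave(master)
slave.check_result("web01/HTTP OK")
slave.connected = False  # connection lost
slave.check_result("web01/HTTP CRITICAL")
slave.check_result("web01/HTTP OK")
slave.reconnect()  # replay what the master missed
print(master.received)
# ['web01/HTTP OK', 'web01/HTTP CRITICAL', 'web01/HTTP OK']
```

Because the master ignores sequence numbers it has already applied, replaying more than necessary is harmless.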

You may know similar approaches from the LConf distributed mechanism for 1.x or from mod_gearman workers, but now it’s built into Icinga 2. At the time of writing, the code is running in our pre-production test labs, both standalone and clustered, using live configuration converted by the conversion script shipped with Icinga 2. Regarding check delegation: the instances calculate by themselves who’s responsible for a given check. In other words, there’s only an internal cluster heartbeat telling all nodes who is alive, but no broadcast message asking “who could do this check?”. This can be stacked into multiple levels of clustering, with the instance in the middle acting as a proxy node.
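
The post doesn’t say which algorithm Icinga 2 uses to decide responsibility, so here’s only an illustration of the general idea, using rendezvous (highest-random-weight) hashing as a swapped-in technique: as long as every node sees the same set of alive nodes via the heartbeat, each one computes the same owner for a check locally, with no broadcast. `responsible_node` and `my_checks` are hypothetical names.

```python
import hashlib

def score(node, check):
    """Deterministic pseudo-random weight for a (node, check) pair."""
    digest = hashlib.sha256(f"{node}/{check}".encode()).digest()
    return int.from_bytes(digest[:8], "big")

def responsible_node(check, alive_nodes):
    """Every node computes the same owner from the same alive set.

    Sorting first makes ties resolve identically on all nodes.
    """
    return max(sorted(alive_nodes), key=lambda node: score(node, check))

def my_checks(me, checks, alive_nodes):
    """The subset of checks this node should execute."""
    return [c for c in checks if responsible_node(c, alive_nodes) == me]
```

A nice property of this scheme: when a node stops sending heartbeats, only the checks it owned move to other nodes; every other check keeps its owner.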

In case you’re wondering: the connection between nodes can be established in either direction. A slave may connect from the local DMZ to the master listening on e.g. port 7777, or a master may connect to a slave listening on e.g. port 7778. And it’s not really master/slave by default; those labels come from the setup you design. They may just be Icinga 2 instances working in a distributed cluster setup. Or, and that was one of the “oh, this is fucking great” ideas during our discussions, an Icinga 2 agent will be an Icinga 2 mini-instance with local configuration and its own check scheduler, either sending its data back or being polled by the Icinga 2 “master” node. The main difference from a clustered instance would be the non-persistent connection. That sounds great but isn’t implemented yet 🙂

Under normal circumstances you won’t need the distributed cluster magic, because Icinga 2 uses all available CPU cores to fire checks, notifications and event handlers in a non-blocking, multi-threaded way. So if you’re running up to roughly 100k checks now, you should try the config conversion script and fire up a single Icinga 2 instance (and please don’t use a VM with 1 CPU and 1 GB RAM for that 😉).
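
As a rough illustration of dispatching checks over a worker pool sized to the available CPU cores, here’s a small Python sketch. Icinga 2 itself is written in C++; Python threads are used here only to show the dispatch pattern. `run_checks` is a hypothetical helper, and the checks are plain callables rather than real plugin executions.

```python
import os
from concurrent.futures import ThreadPoolExecutor, as_completed

def run_checks(checks, workers=None):
    """Run check callables concurrently and collect results as they complete.

    `checks` maps a check name to a zero-argument callable returning (state, output).
    """
    workers = workers or os.cpu_count() or 1
    results = {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {pool.submit(fn): name for name, fn in checks.items()}
        for fut in as_completed(futures):
            name = futures[fut]
            try:
                results[name] = fut.result()
            except Exception as exc:  # a failing check must not stop the others
                results[name] = ("UNKNOWN", str(exc))
    return results

def ping_ok():
    return ("OK", "rta 0.1ms")

def broken():
    raise RuntimeError("plugin not found")

print(sorted(run_checks({"web01!ping": ping_ok, "web02!ping": broken}).items()))
# [('web01!ping', ('OK', 'rta 0.1ms')), ('web02!ping', ('UNKNOWN', 'plugin not found'))]
```

No check waits for another: slow or failing checks only occupy their own worker.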

Now that the redesigned distribution of checks and data is working, we’ve re-evaluated our to-dos. We’re planning to release 0.0.3 at OSMC in about 3.5 weeks, so we need to get everything into shape while finishing up the rest. The IDO database backend now receives all the historical data required by Web/Reporting, and it has gained a timer-based cleanup mechanism that deletes table entries older than XYZ_age (e.g. notifications, downtimehistory). Documentation will be written in Markdown, and packages (deb, rpm) are in the works as well. The installation is being revamped and updated; notably, a command like “i2enfeature compat” will enable or disable feature components within Icinga 2 (thanks to Debian and Apache for the idea). Finally, we’ve done a last review of the configuration itself and corrected some parts with duplicated information or wrong naming (e.g. a host’s hostcheck is now a host’s check, and a notification requires user_groups, not just groups).
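
A timer-based cleanup like the one described for the IDO backend could look roughly like this Python sketch. It assumes per-table maximum ages (the post’s XYZ_age), uses SQLite as a stand-in for MySQL, and simplifies both tables to a single `entry_time` column; `MAX_AGES`, `cleanup` and `start_cleanup_timer` are hypothetical names, and the real IDO tables are `icinga_`-prefixed with their own time columns.

```python
import sqlite3
import threading
import time

# Hypothetical per-table retention in seconds (the post's XYZ_age is configurable).
MAX_AGES = {"notifications": 31 * 86400, "downtimehistory": 90 * 86400}

def cleanup(conn, max_ages, now):
    """Delete rows older than each table's max age; return deleted row counts."""
    deleted = {}
    for table, max_age in max_ages.items():
        cur = conn.execute(f"DELETE FROM {table} WHERE entry_time < ?", (now - max_age,))
        deleted[table] = cur.rowcount
    conn.commit()
    return deleted

def start_cleanup_timer(db_path, max_ages, interval, stop):
    """Run cleanup every `interval` seconds until `stop` (a threading.Event) is set."""
    def loop():
        conn = sqlite3.connect(db_path)  # connection owned by the timer thread
        try:
            while not stop.wait(interval):  # wait() returns True once stop is set
                cleanup(conn, max_ages, time.time())
        finally:
            conn.close()

    thread = threading.Thread(target=loop, daemon=True)
    thread.start()
    return thread
```

Running the cleanup on its own timer keeps the regular event-driven inserts free of housekeeping work.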

In summary, Icinga 2 0.0.3 will feature:

- StatusDataWriter and ExternalCommandListener (formerly Compat) and CompatLogger (formerly CompatLog), providing status.dat, objects.cache, icinga2.cmd and icinga.log for the Icinga 1.x Classic UI

- IdoMysqlConnection and ExternalCommandListener for Icinga 1.x Web

- IdoMysqlConnection for Icinga 1.x Reporting, NagVis

- LivestatusListener for addons using the livestatus interface (history tables TBD)

- PerfDataWriter for graphing addons such as PNP/inGraph/graphite (can be loaded multiple times!)

- CheckResultReader to collect Icinga 1.x slave check results (migrate your distributed setup step by step)

- a template-focused configuration language, backed by the conversion script for Icinga 1.x configuration

- the base feature set of Icinga 2 as a monitoring framework itself
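
For addons that talk to the ExternalCommandListener through icinga2.cmd, each command is a single line in the Icinga 1.x external command format, `[<unix timestamp>] <COMMAND>;<arg1>;<arg2>;...`. Here’s a small Python sketch of building and submitting such a line; `format_external_command` and `submit` are hypothetical helpers, the command names come from the 1.x external command set (the post doesn’t list which ones 0.0.3 supports), and the pipe path depends on your installation.

```python
import time

def format_external_command(command, *args, timestamp=None):
    """Build one line: [<unix timestamp>] <COMMAND>;<arg1>;<arg2>;..."""
    ts = int(time.time() if timestamp is None else timestamp)
    fields = [command, *map(str, args)]
    if any("\n" in f for f in fields):
        # A newline would terminate the command early.
        raise ValueError("external command fields must not contain newlines")
    return f"[{ts}] {';'.join(fields)}\n"

def submit(command_file, line):
    # The command file is a named pipe (FIFO); opening it for writing
    # blocks until the listener has it open for reading.
    with open(command_file, "w") as fifo:
        fifo.write(line)

line = format_external_command(
    "PROCESS_SERVICE_CHECK_RESULT", "web01", "HTTP", 2, "Connection refused",
    timestamp=1380000000,
)
print(repr(line))
# '[1380000000] PROCESS_SERVICE_CHECK_RESULT;web01;HTTP;2;Connection refused\n'
# submit("/path/to/icinga2.cmd", line)  # hypothetical path, adjust to your setup
```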

We need feedback from your tests of:

- Installation (source; packages TBA)

- Config conversion script

- Configuration syntax and handling (create your own from scratch)

- Features available in Icinga 1.x that we might have missed

Our presentation at OSMC will include a live hands-on session with all the features mentioned, most notably the cluster functionality. Join us there for discussion & beer, or hop onto the community channels where developers lurk as well 🙂

Thanks for testing in advance!